The simple approach (scaling down by a factor of 2 or less)

If the loaded image is to be scaled down by a factor of 2 or less (say 400 -> 200), simply use the built-in "smoothing" property of the Bitmap class. It can be set directly on the Bitmap itself or via display elements such as mx:Image in Flex. With this property set to true the Bitmap uses bilinear scaling, which gives quite good results.

Figure: grayscale bilinear interpolation
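To make "bilinear scaling" concrete, here is a minimal sketch of how one output value is computed from a grayscale image. It is generic bilinear interpolation written in TypeScript, not the Flash Player's actual implementation, and the names `GrayImage`, `pixelAt` and `sampleBilinear` are made up for illustration. Note that each sample reads only a 2 x 2 block of source pixels, which is one plausible reason the results degrade once you shrink by more than a factor of 2.

```typescript
// Hypothetical grayscale image: values 0..255, stored row by row.
interface GrayImage {
  width: number;
  height: number;
  pixels: number[];
}

// Read one pixel, clamping the coordinates to the image edges.
function pixelAt(img: GrayImage, x: number, y: number): number {
  const cx = Math.min(Math.max(x, 0), img.width - 1);
  const cy = Math.min(Math.max(y, 0), img.height - 1);
  return img.pixels[cy * img.width + cx];
}

// Bilinear sample at a fractional position: blend the 2 x 2 block of
// pixels surrounding (x, y), weighted by how close each one is.
function sampleBilinear(img: GrayImage, x: number, y: number): number {
  const x0 = Math.floor(x);
  const y0 = Math.floor(y);
  const fx = x - x0; // horizontal weight of the right-hand column
  const fy = y - y0; // vertical weight of the bottom row
  const top =
    pixelAt(img, x0, y0) * (1 - fx) + pixelAt(img, x0 + 1, y0) * fx;
  const bottom =
    pixelAt(img, x0, y0 + 1) * (1 - fx) + pixelAt(img, x0 + 1, y0 + 1) * fx;
  return top * (1 - fy) + bottom * fy;
}

// Sampling the exact middle of a 2 x 2 image averages all four pixels.
const tinyImage: GrayImage = { width: 2, height: 2, pixels: [0, 100, 100, 200] };
const centerValue = sampleBilinear(tinyImage, 0.5, 0.5);
```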

Scaling down more than 2 times

Let's say you have quite a large image and want to create a thumbnail from it. A thumbnail is often far less than half the size of the original. It would be nice to simply scale the image as above, or better, resample it with the "smoothing" property set to true. However, if you try this, the FlashPlayer will simply fail and fall back to the "nearest neighbor" algorithm, and you'll soon discover that this gives a horrible result.

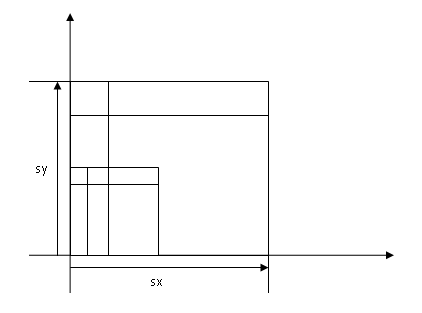

What you need in this case is an iterative or recursive resampling of the image. In layman's terms, this means resampling the image several times, each time by a downscaling factor of no more than 2. You could call this a multi pass resampler (strictly speaking a multi-multi pass resampler, as the draw() method is itself multi pass). You still use the built-in "BitmapData.draw()" method; you only divide the work into multiple jobs. This gives a much better result. Note that in my experience function calls in ActionScript 3 are quite expensive, so an iterative approach is probably preferable to a recursive one.
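The pass planning can be sketched as a plain loop. The snippet below is TypeScript rather than ActionScript 3 and only works out the intermediate sizes; in Flash, each step would correspond to one "BitmapData.draw()" call into a smaller BitmapData. The names `PassSize` and `planPasses` are made up for illustration, and the sketch assumes you are downscaling on both axes to a target of at least 1 x 1 pixels.

```typescript
// Hypothetical size type, in pixels.
interface PassSize {
  width: number;
  height: number;
}

// List the size produced by each pass of a multi pass downscale.
// Every pass shrinks the image by at most a factor of 2 per axis, and
// the last pass lands exactly on the target size.
// Assumption: target is no larger than source and at least 1 x 1.
function planPasses(source: PassSize, target: PassSize): PassSize[] {
  const passes: PassSize[] = [];
  let current = source;
  // Iterative on purpose: halve while more than a factor of 2 remains.
  while (
    current.width > target.width * 2 ||
    current.height > target.height * 2
  ) {
    current = {
      width: Math.max(target.width, Math.ceil(current.width / 2)),
      height: Math.max(target.height, Math.ceil(current.height / 2)),
    };
    passes.push(current);
  }
  passes.push(target);
  return passes;
}

// 1200 x 1200 down to 200 x 200 takes three passes:
// 600 x 600, then 300 x 300, then 200 x 200.
const thumbnailPasses = planPasses(
  { width: 1200, height: 1200 },
  { width: 200, height: 200 }
);
```

The final pass is usually smaller than a full halving (here 300 -> 200, a factor of 1.5), which keeps every step within the range where smoothing behaves well.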

Using PixelBender

If you have decided to go for the manual approach of resampling the picture, you can use a PixelBender shader to do the job. In this case the actual resampling will be a lot faster (the PixelBender kernel language is C-like), but applying the shader and passing the image back and forth takes time. See the timing results further down.

Combining the two

So, what if you have an application and you don't know the downscale factor in advance? Then you'll need to combine both approaches, like so:

- Calculate the scale factor

- If the scale factor is 2 or less, set "smoothing" to true on the container or do a single pass resampling.

- If the scale factor is more than 2, use the multi pass scaling approach and set the "smoothing" property of the container to false (otherwise the result will be blurry).
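The steps above can be sketched as a small decision function. This is TypeScript rather than ActionScript 3, and `ScalingPlan`, `downscaleFactor` and `choosePlan` are hypothetical names. Treating a factor of exactly 2 as the simple case is an assumption based on the 400 -> 200 example, as is using the larger of the two axis factors for non-square images.

```typescript
// Hypothetical result type: which approach to use, and what to set the
// container's "smoothing" property to.
interface ScalingPlan {
  multiPass: boolean;
  containerSmoothing: boolean;
}

// Downscale factor, taking the larger of the two axes (an assumption).
function downscaleFactor(
  sourceWidth: number,
  sourceHeight: number,
  targetWidth: number,
  targetHeight: number
): number {
  return Math.max(sourceWidth / targetWidth, sourceHeight / targetHeight);
}

// Factor of 2 or less: let the "smoothing" property do the work.
// More than 2: resample in several passes and turn smoothing off on the
// container so the already resampled image is not blurred again.
function choosePlan(factor: number): ScalingPlan {
  if (factor <= 2) {
    return { multiPass: false, containerSmoothing: true };
  }
  return { multiPass: true, containerSmoothing: false };
}

// A 1200 x 1200 image shown at 200 x 200 is a factor of 6.
const thumbnailFactor = downscaleFactor(1200, 1200, 200, 200);
const thumbnailPlan = choosePlan(thumbnailFactor);
```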

Let's say that you have an image that is 1200 x 1200 pixels and you're resampling it to 200 x 200. This is a 6 times downscale and will look terrible using only the "smoothing" property, although that is the faster option.

Let's look at some hard results. In this test we see how much extra time each method takes compared to plain "nearest neighbor" scaling (the default in Bitmaps if smoothing is off).

| Method | Extra time (ms) |
|---|---|
| Smoothing property on | 0 |
| Multi pass resample | 9 |
| Multi pass PixelBender | 32 |

Using the smoothing property takes no extra time, because it fails and falls back to nearest neighbor. The other two are both pretty fast. However, even though the PixelBender kernel itself resamples faster, applying the shader eats a lot of time, which makes the multi pass resampler the way to go for a large rescaling operation.

2 comments:

I am having this issue myself and am interested in your approach. I've tried several I've found online with no luck.

Can you elaborate on your pixelbender workflow? Or maybe do you have any sample code?

The iterative scaling works great, or not great, but it works. As for the PixelBender stuff, have a look at this post: http://www.brooksandrus.com/blog/2009/03/11/bilinear-resampling-with-flash-player-and-pixel-bender/
